HDX was publicly launched at the Open Knowledge Festival in Berlin in July 2014, featuring 800 datasets shared by over a dozen organizations. Fast forward ten years and HDX is an integral platform for the humanitarian data community: 206 organizations are sharing over 20,000 datasets, covering every active humanitarian crisis, with users in 230 countries and territories.

The concept behind HDX – open data aggregation – was not a new idea. HDX was formed at the peak of the open data movement. Actors spanning multiple sectors had come together to challenge the conventions of information sharing and demanded a departure from the era of closed data systems. They advocated for data to be open: freely available for anyone to use, reuse, and redistribute without restrictions, subject only to attribution. The World Bank’s open data initiative was a key inspiration for HDX, providing access to data that had previously been behind a paywall.

Before HDX, the humanitarian data landscape was fragmented and opaque. Humanitarian data was hard to find, and there was a lack of clarity around whether data could be trusted or reused: Was it the latest version? Was there any metadata about the source and data collection methodology? Was there a license? In the humanitarian sector, getting access to data, and answers to questions, depended to a large extent on personal connections.

Enter HDX

OCHA created HDX with the goal of making data easy to find and use for analysis, in line with its coordination mandate. Our theory of change was that, by providing open and accessible data, HDX could lower the barrier to entry for data use, in turn improving data-informed decision making in humanitarian response.

While the willingness of humanitarians to share data preceded the open data movement, data was not commonly shared in a manner that maximized opportunities for reuse. To realize our ambition, HDX had to drive behavior change, create incentives and reduce friction for data sharing across the sector.

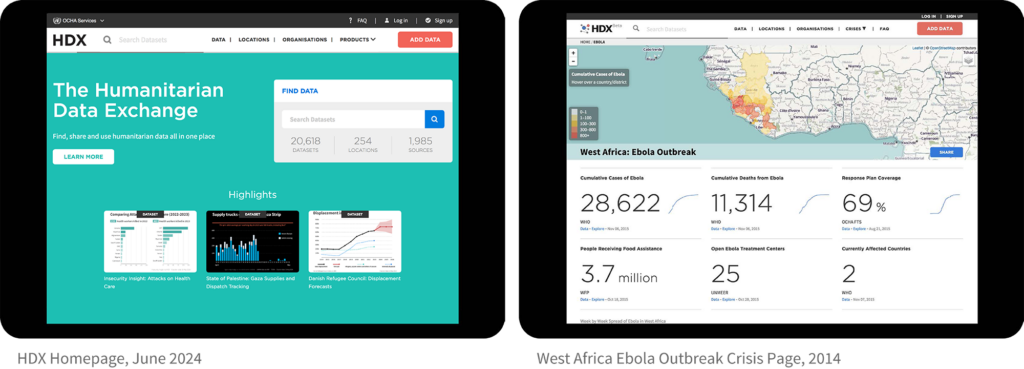

A clear source of friction, for example, was the dependency on PDF formats for reporting on crises. In 2014, shortly after the launch of HDX, the Ebola outbreak in West Africa was intensifying. Timely information on the location of Ebola cases and deaths was essential to limit the spread of the disease. This data was made available through daily situation reports published in PDFs by WHO. We manually extracted the data from these reports into a machine-readable file that was shared on HDX and sourced to WHO. The Ebola cases and deaths data became the most popular dataset on the platform, proving the value of open data. The New York Times used it to create a visual of the outbreak that ran on their homepage, reaching millions of readers.

The Ebola crisis was a test case for a number of data services that HDX continues to offer today. We created our first crisis page to help non-technical users understand trends across locations and sources. Around this same time, WFP started sharing their food price data for Ebola-affected countries in West Africa on HDX. In exchange, we visualized their data and created the first custom organization page. These services – a crisis page, a branded organization page and data visualization – incentivized data sharing by other organizations and drove adoption of the platform.

“HDX was the first time datasets were brought together in an easy and consumable way for humanitarian purposes. The data was clean and from a source that could be trusted. By placing data into one central space, users can now connect different sources of information to conduct comprehensive analysis. I believe HDX is one of the core reasons why humanitarians started using data.”

-Rohini Swaminathan, Head of Geospatial Support Unit, World Food Programme

Ten Years of Growth

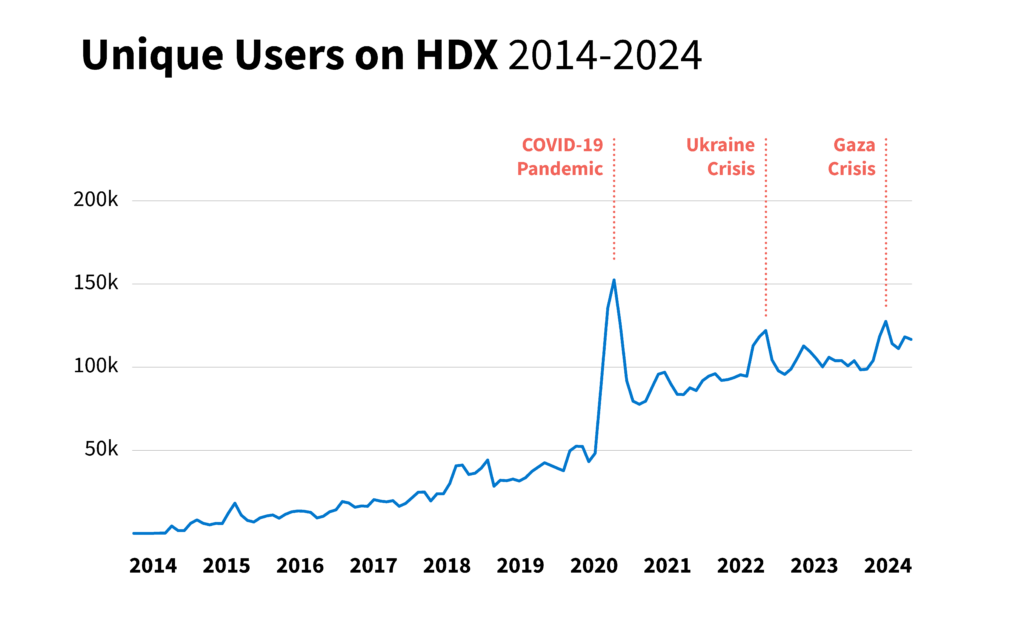

HDX has seen significant growth over the last ten years, reaching around 130,000 unique users per month in December 2023. Global crises drive peaks in use, as we have seen with the COVID-19 pandemic, the war in Ukraine and the hostilities in Gaza.

The top user locations have consistently included the United States, United Kingdom, Germany, Kenya, Bangladesh and the Philippines. Among locations with humanitarian operations, Ethiopia, Nigeria and Ukraine see the most use. Over the past ten years, the number of users has grown faster in locations with a Humanitarian Response Plan (HRP) than in non-HRP locations.

Our early research in 2014 found that one of the key determinants of how much a user trusted a dataset was the organization it came from. We have since worked with hundreds of organizations to help them establish a data sharing presence on HDX.

Big Shifts

Data usage in the humanitarian sector has evolved significantly over the last ten years. For its part, HDX has led an important shift toward making data central to humanitarian response. We have also had a front row seat to the big shifts with data in the sector. We highlight a few below.

A shift to responsible data

The call for open data has evolved, in some cases, to ‘share and protect’ data, based on a more complex understanding of the relationship between data and humanitarian response. Over the last decade, as technological advances have made data management easier, humanitarian organizations have faced a new challenge: how to balance the benefits of widespread data sharing with the risks of disclosing potentially sensitive information about people in crisis.

At the time of HDX’s launch, there was limited guidance on the management of sensitive data in humanitarian contexts. By 2021, the first system-wide IASC Operational Guidance on Data Responsibility in Humanitarian Action had been endorsed, outlining eight actions for the safe, ethical and effective management of data. This includes the development of Information Sharing Protocols, which govern data and information sharing in an operation.

HDX responded to this shift by introducing HDX Connect, a feature that enables organizations to share only metadata while the underlying data is available by request. We also introduced methods for disclosure control of datasets, such as needs assessments, that may have a high risk of re-identification of people.

A shift to programmatic use

Humanitarian organizations have advanced in their technical capacity in recent years and the use of APIs is growing. As a result, the scale and predictability of humanitarian datasets have greatly improved.

Sixty-eight percent of the 20,000+ datasets on HDX are shared programmatically through APIs, reducing the need to manually add and update individual datasets. This shift to using data pipelines is a sign of increased sophistication among our data partners. The same can be said for a majority of HDX users who want to access data through frictionless services rather than by going to the site to download files. We expect this trend toward automation to continue.

Although positive, this comes with the risk of further fragmentation of the humanitarian data ecosystem, overwhelming data managers and developers with too many systems to learn in order to access the data they need. HDX will leverage its role as a central platform to provide the most essential data in a common system, simplifying programmatic access to data. This new ‘humanitarian API’ will provide a consistent, standardized and machine-readable interface to query and retrieve data from a set of high-value indicators, starting with those in the HDX Data Grids.
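To make programmatic access concrete, here is a minimal Python sketch of searching the catalogue from code instead of downloading files by hand. It assumes HDX exposes a CKAN-style action API at `https://data.humdata.org/api/3/action` with a `package_search` endpoint; that base URL, the response fields read below, and the helper names are illustrative assumptions, and this is not the new 'humanitarian API' described above.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: HDX serves a CKAN-style "action" API at this base URL.
HDX_API_BASE = "https://data.humdata.org/api/3/action"


def build_search_url(query, rows=5):
    """Build a package_search URL for a free-text query (hypothetical helper)."""
    params = urlencode({"q": query, "rows": rows})
    return f"{HDX_API_BASE}/package_search?{params}"


def summarize_results(payload):
    """Pull title, organization and file formats out of a search response.

    Assumes the CKAN response shape: {"result": {"results": [dataset, ...]}}.
    """
    summaries = []
    for dataset in payload.get("result", {}).get("results", []):
        org = (dataset.get("organization") or {}).get("title", "unknown")
        formats = sorted(
            {
                resource["format"].upper()
                for resource in dataset.get("resources", [])
                if resource.get("format")
            }
        )
        summaries.append(
            {
                "title": dataset.get("title", ""),
                "organization": org,
                "formats": formats,
            }
        )
    return summaries


def search_datasets(query, rows=5, timeout=30):
    """Run the search over the network and return dataset summaries."""
    with urlopen(build_search_url(query, rows), timeout=timeout) as response:
        return summarize_results(json.load(response))
```

Calling `search_datasets("food prices")` would return a short list of matching datasets with their source organization and available formats, provided the endpoint behaves as assumed. Parsing is kept separate from the network call so a pipeline can be tested against a saved response.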

A shift to insight

More humanitarians are using data in their daily workflows than ever before. However, access to data is only as powerful as the insight it provides. As such, conducting analysis is a crucial part of humanitarian data efforts and this work is set to accelerate in the coming years.

When HDX started, we supported data contributors by making a visual of one of their datasets. Then we began to combine dozens of datasets on HDX to create broader situational awareness of a crisis, such as with the data explorers for the COVID-19 pandemic and the war in Ukraine. Now we are using data and forecasts to predict the impact of a crisis and trigger action before it unfolds. These impact models rely on climate data, satellite imagery, and novel sources such as building footprint data from Google Research and wealth indices from Meta, all of which are available on HDX.

Advances in AI will enable analysis at a previously unattainable scale, supporting the shift towards proactive approaches in the humanitarian sector. AI has the potential to drive operational gains for humanitarians through enhanced efficiency in routine tasks like report drafting and data formatting. The speed and scale at which AI tools can digest and analyze information could markedly increase the capacity for large-scale humanitarian analysis.

The Future of HDX

In contrast to ten years ago, data is now firmly on the agenda of the United Nations. The Secretary-General’s Data Strategy, created in 2020, is committed to unlocking “the data potential of the UN family to better support people and planet.” Demand for better data and analysis has grown in the humanitarian sector, as it contends with unprecedented needs and insufficient funds. Over 300 million people are in need of humanitarian assistance in 2024. In this context, data is more powerful than ever, and the stakes have never been higher.

The future of HDX will meet the changing needs of humanitarians, leveraging advances in emerging technologies to do so. Our product roadmap builds on the progress of the past ten years, and creates new opportunities for faster access to reliable data and insight about humanitarian crises. We will evolve the HDX platform to be more sophisticated in its processing of data at scale with a continued focus on data quality.

Trusted partnerships with individuals and organizations in the humanitarian community will always be at the forefront of our work. Through HDX, and the Centre’s broader objectives, we hope to drive another decade of increased use and impact of data in humanitarian response.

This blog was adapted from an article on ten years of HDX in The State of Open Humanitarian Data 2024. Please be in touch with questions or comments at hdx@un.org.